How a team of scientists worked to inoculate a million users against misinformation

Jon Roozenbeek, University of Cambridge; Sander van der Linden, University of Cambridge, and Stephan Lewandowsky, University of Bristol

From the COVID-19 pandemic to the war in Ukraine, misinformation is rife worldwide. Many tools have been designed to help people spot misinformation. The problem with most of them is how hard they are to deliver at scale.

But we may have found a solution. In our new study we designed and tested five short videos that “prebunk” viewers, in order to inoculate them against the deceptive and manipulative techniques often used online to mislead people. Our study is the largest of its kind and the first to test this kind of intervention on YouTube. Five million people were shown the videos, of whom one million watched them.

We found that these videos help people spot misinformation not only in controlled experiments but also in the real world. Watching one of our videos via a YouTube ad boosted YouTube users’ ability to recognise misinformation.

As opposed to prebunking, debunking (or fact-checking) misinformation has several problems. It’s often difficult to establish what the truth is. Fact-checks also frequently fail to reach the people who are most likely to believe the misinformation, and getting people to accept fact-checks can be challenging, especially if people have a strong political identity.

Studies show that publishing fact-checks online does not fully reverse the effects of misinformation, a phenomenon known as the continued influence effect. So far, researchers have struggled to find a solution that can rapidly reach millions of people.

The big idea

Inoculation theory is the notion that you can forge psychological resistance against attempts to manipulate you, much like a medical vaccine is a weakened version of a pathogen that prompts your immune system to create antibodies. Prebunking interventions are mostly based on this theory.

Most models have focused on counteracting individual examples of misinformation, such as posts about climate change. However, in recent years researchers including ourselves have explored ways to inoculate people against the techniques and tropes that underlie much of the misinformation we see online. Such techniques include the use of emotive language to trigger outrage and fear, or the scapegoating of people and groups for an issue they have little to no control over.

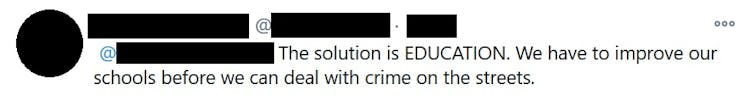

An example of a social media post (which we used as one of the stimuli in our study) that makes use of a false dichotomy (or false dilemma), a commonly used manipulation technique.

Online games such as Cranky Uncle and Bad News were among the first attempts at this prebunking method. There are several advantages to this approach. You don’t have to act as the arbiter of truth, because you don’t have to fact-check specific claims you see online. It allows you to side-step emotive discussions about the credibility of news sources. And perhaps most importantly, you do not need to know what piece of misinformation will go viral next.

A scalable approach

But not everyone has the time or motivation to play a game – so we collaborated with Jigsaw (Google’s research unit) on a solution to reach more of these people. Our team developed five prebunking videos, each lasting less than two minutes, which aimed to immunise viewers against a different manipulation technique or logical fallacy. As part of the project, we launched a website where people can watch and download these videos.

Screenshots from one of the videos.

We first tested their impact in the lab. We ran six experiments (with about 6,400 participants in total) in which people watched one of our videos or an unrelated control video about freezer burn. Afterwards, within 24 hours of viewing the video, they were asked to evaluate a series of (unpublished) social media content examples that either did or did not make use of misinformation techniques. We found that people who saw our prebunking videos were significantly less susceptible to manipulation than the control participants.

But findings from lab studies do not necessarily translate to the real world. So we also ran a field study on YouTube, the world’s second-most visited website (owned by Google), to test the effectiveness of the video interventions there.

For this study we focused on US YouTube users over 18 years old who had previously watched political content on the platform. We ran an ad campaign with two of our videos, showing them to around 1 million YouTube users. Next we used YouTube’s BrandLift engagement tool to ask people who saw a prebunking video to answer one multiple-choice question. The question assessed their ability to identify a manipulation technique in a news headline. We also had a control group, which answered the same survey question but didn’t see the prebunking video. We found the prebunking group was 5-10% better than the control group at correctly identifying misinformation, showing that this approach improves resilience even in a distracting environment like YouTube.

One of the prebunking videos (“false dichotomies”)

Our videos would cost less than 4p per video view (this would cover YouTube advertising fees). As a result of this study, Google is going to run an ad campaign using similar videos in September 2022. This campaign will be run in Poland and the Czech Republic to counter disinformation about refugees within the context of the Russia-Ukraine war.

When you are trying to build resilience, it is useful to avoid being too direct in telling people what to believe, because that might trigger something called psychological reactance. Reactance means that people feel their freedom to make decisions is being threatened, leading to them digging their heels in and rejecting new information. Inoculation theory is about empowering people to make their own decisions about what to believe.

At times, the spread of conspiracy theories and false information online can be overwhelming. But our study has shown it is possible to turn the tide. The more that social media platforms work together with independent scientists to design, test and implement scalable, evidence-based solutions, the better our chances of making society immune to the onslaught of misinformation.

Jon Roozenbeek, Postdoctoral Fellow, Psychology, University of Cambridge; Sander van der Linden, Professor of Social Psychology in Society and Director, Cambridge Social Decision-Making Lab, University of Cambridge, and Stephan Lewandowsky, Chair of Cognitive Psychology, University of Bristol

This article is republished from The Conversation under a Creative Commons license. Read the original article.
